In-Band Full-Duplex Technology Improves Wireless Communications

According to a recent report by research firm Gartner, the rise of the Internet of Things (IoT) and the growth of the wireless industry are set to boost the number of mobile devices in use globally to more than 21 billion by 2020. The widespread use of mobile devices already places significant demand on the cellular systems that support all this wireless connectivity. The demand is heaviest at locations such as outdoor concerts or sports arenas, where large numbers of users may be connecting simultaneously.

Current-era cellular technology, and even proposed next-generation 5G technology, will be severely strained to provide the high data rates and wide-area communication range needed to support this escalating device usage. In response, the communications community has been looking to in-band full-duplex (IBFD) technology to increase capacity and the number of supported devices by allowing devices to transmit and receive on the same frequency at the same time. This ability can double the devices’ efficiency within the frequency spectrum. It also reduces message latency, because a device no longer has to wait to switch between send and receive modes.

In a recently published article in IEEE Transactions on Microwave Theory and Techniques, "In-Band Full-Duplex Technology: Techniques and Systems Survey," three MIT Lincoln Laboratory researchers from the RF Technology Group assessed the capabilities of more than 50 representative IBFD systems. The researchers are Kenneth Kolodziej, Bradley Perry, and Jeffrey Herd. They concluded that incorporating IBFD technology into wireless systems does enhance the systems’ ability to operate in today’s congested frequency spectrum and lets them use that spectrum more efficiently.

However, the authors cautioned that the potential of IBFD for wireless communications can be realized only if system designers develop techniques to mitigate the self-interference generated by simultaneously transmitting and receiving on the same frequency. The IBFD systems developed so far are also limited in the range they can achieve and the number of devices they can accommodate, because they rely on antennas that radiate omnidirectionally.

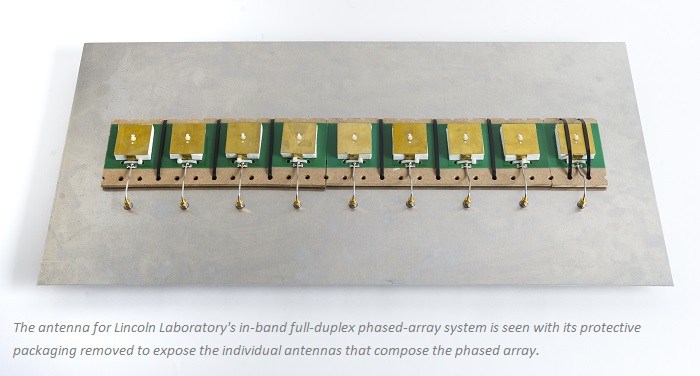

To overcome these limitations, Lincoln Laboratory researchers have demonstrated new IBFD technology that, for the first time, can operate on phased-array antennas. According to researcher Kenneth Kolodziej, phased arrays can direct communication traffic to targeted areas. This extends the distances that RF signals reach and significantly increases the number of devices that a single node can connect to.

Handling the Self-Interference Challenge

The research team, led by Kolodziej, Perry, and Jonathan Doane, addressed the self-interference problem in two stages. Adaptive digital beamforming reduces coupling between the transmit and receive antenna beams, and adaptive digital cancellation then removes the residual self-interference. According to Kolodziej, eliminating self-interference is particularly challenging within a phased array because the close proximity of the antennas results in higher interference levels. The problem becomes even harder when transmit powers exceed half a watt. At those levels the transmitters generate distortion and noise signals, which must also be removed for the system to work.

Phased-array antennas can use beamforming to dynamically change the shape of the antenna pattern, either focusing or reducing energy in a specific direction. In the laboratory’s novel system, transmit digital beamforming minimizes the total interference signal at each receiving antenna. Receive beamforming then minimizes the self-interference accepted from each transmitter.
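The following is a minimal sketch of how transmit weights can be chosen so that the energy coupling into the receive section cancels out. It assumes the transmit-to-receive coupling matrix is known exactly. It uses a zero-forcing null-space projection, which is one textbook technique and not necessarily the adaptive algorithm Lincoln Laboratory uses. The following are all hypothetical: the 8-element transmit and 4-element receive line arrays, the 1/distance coupling model, the 30-degree target, and every function name.

```python
import cmath
import math


def project_out(weights, constraints):
    """Return the part of `weights` that no constraint row can "see".

    Afterwards sum(row[j] * w[j]) == 0 for every row, i.e. w is orthogonal to
    each conjugated row, so we orthonormalize the conjugated rows
    (modified Gram-Schmidt) and subtract w's projection onto them.
    """
    basis = []
    for row in constraints:
        v = [x.conjugate() for x in row]
        for q in basis:
            coef = sum(a * b.conjugate() for a, b in zip(v, q))
            v = [a - coef * b for a, b in zip(v, q)]
        norm = math.sqrt(sum(abs(a) ** 2 for a in v))
        if norm > 1e-12:  # skip rows that add no new direction
            basis.append([a / norm for a in v])
    w = list(weights)
    for q in basis:
        coef = sum(a * b.conjugate() for a, b in zip(w, q))
        w = [a - coef * b for a, b in zip(w, q)]
    return w


def coupling_matrix(tx_pos, rx_pos):
    """Hypothetical Tx->Rx coupling: 1/distance amplitude, free-space phase.

    Positions are in half-wavelengths, so a distance d adds a phase of pi*d.
    Entry [i][j] is the coupling from transmit element j into receive element i.
    """
    return [[cmath.exp(-1j * math.pi * (r - t)) / abs(r - t) for t in tx_pos]
            for r in rx_pos]


def steering(n, theta):
    """Uniform-linear-array steering vector (half-wavelength spacing)."""
    return [cmath.exp(-1j * math.pi * k * math.sin(theta)) for k in range(n)]


def coupled_power(c, w):
    """Total self-interference power landing on the receive elements."""
    return sum(abs(sum(cij * wj for cij, wj in zip(row, w))) ** 2 for row in c)


def gain_toward(w, a):
    """Magnitude of the transmitted field in the direction described by `a`."""
    return abs(sum(wk * ak.conjugate() for wk, ak in zip(w, a)))


tx_pos = list(range(8))      # transmit section: elements 0..7
rx_pos = list(range(8, 12))  # adjacent receive section: elements 8..11
C = coupling_matrix(tx_pos, rx_pos)
a = steering(len(tx_pos), math.radians(30))  # plain beam toward a user at 30 deg
w = project_out(a, C)                         # same beam with coupling nulled

print("coupled power, plain steering:", coupled_power(C, a))
print("coupled power, nulled weights:", coupled_power(C, w))
print("gain toward 30 deg:", gain_toward(a, a), "->", gain_toward(w, a))
```

The projection drives the coupled power to numerically zero but gives up some gain toward the user, because the 4 receive constraints consume 4 of the 8 transmit degrees of freedom. The receive side can be treated analogously with the transposed coupling matrix. However, when there are fewer receive elements than transmitters, an exact null is generally unavailable, so the interference would be minimized (for example, in a least-squares sense) rather than zeroed.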

To support this digital beamforming, the laboratory’s phased array is partitioned into a transmitting section of antennas and an adjacent receiving section. Each antenna in the array may be assigned to either function. The size and geometry of the transmit and receive zones can be modified to support the various antenna patterns and functions required by the overall system, and can also be tailored to the system’s location.
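The reconfigurable partition can be pictured as plain data: a map from each element’s grid position to its role, produced by a placement rule. The sketch below makes that concrete. The 8 x 8 array size, the two example rules, and the names `assign_roles` and `count` are hypothetical and are not taken from the Lincoln Laboratory design.

```python
def assign_roles(rows, cols, is_transmit):
    """Label every element of a rows x cols array "tx" or "rx" via a placement rule."""
    return {
        (r, c): "tx" if is_transmit(r, c) else "rx"
        for r in range(rows)
        for c in range(cols)
    }


def count(roles, role):
    """Number of elements assigned to `role`."""
    return sum(1 for v in roles.values() if v == role)


# Hypothetical 8 x 8 array: left half transmits, adjacent right half receives.
side_by_side = assign_roles(8, 8, lambda r, c: c < 4)
# Same hardware re-partitioned: top three rows transmit, the rest receive.
top_bottom = assign_roles(8, 8, lambda r, c: r < 3)

for name, roles in (("side_by_side", side_by_side), ("top_bottom", top_bottom)):
    print(name, "tx:", count(roles, "tx"), "rx:", count(roles, "rx"))
```

Because the partition is just data, changing the size or geometry of the zones means changing the rule rather than the hardware. In a system built this way, the beamforming and cancellation stages would read their element lists from the map.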

Even after digital beamforming has reduced the interference, a significant amount of noise, as well as residual transmitted signal, remains in the received signal. Traditional digital cancellation techniques work from the digital copy of the intended waveform. They can therefore cancel the residual transmitted signal but cannot eliminate the noise, which is generated in the transmitter hardware and is absent from that copy. To solve this problem, the Lincoln Laboratory team coupled the output of each active transmit channel into the (otherwise unused) receive channel for that antenna. Using this measured reference copy of the transmitted waveform, an adaptive cancellation algorithm can then filter out the transmit signal, distortion, and noise, leaving the uncorrupted received signal.
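Below is a minimal sketch of the reference-based cancellation idea. It assumes a normalized LMS (NLMS) adaptive filter, which is one common choice; the article does not say which adaptive algorithm the Lincoln Laboratory system uses. The sketch runs the same canceller twice, first with the ideal digital waveform as its reference and then with a measured copy of the actual transmitter output. Only the measured copy contains the transmitter’s distortion and noise, so only that reference lets the filter remove them. The following are all hypothetical: the coupling channel `h`, the tanh compression standing in for transmitter distortion, the noise level, the weak sinusoid standing in for the wanted signal, and all function names.

```python
import math
import random


def nlms_cancel(reference, received, taps=4, mu=0.1, eps=1e-9):
    """Adaptively subtract the part of `received` that is predictable from `reference`.

    A normalized-LMS FIR filter learns the coupling from the reference into the
    receiver; the returned list is the receiver output after cancellation.
    """
    w = [0.0] * taps
    window = [0.0] * taps  # most recent reference samples, newest first
    out = []
    for ref, rx in zip(reference, received):
        window = [ref] + window[:-1]
        estimate = sum(wk * uk for wk, uk in zip(w, window))
        err = rx - estimate  # what is left after cancellation
        step = mu * err / (eps + sum(uk * uk for uk in window))
        w = [wk + step * uk for wk, uk in zip(w, window)]
        out.append(err)
    return out


def residual_interference(cancelled, wanted, last=2000):
    """Mean power of what remains besides the wanted signal, after convergence."""
    tail = range(len(cancelled) - last, len(cancelled))
    return sum((cancelled[i] - wanted[i]) ** 2 for i in tail) / last


def simulate(n=6000, seed=1):
    rng = random.Random(seed)
    h = [0.8, -0.3, 0.1]  # hypothetical coupling from transmit output to receiver
    ideal = [rng.gauss(0.0, 1.0) for _ in range(n)]  # digital transmit waveform
    # Hypothetical transmitter: soft compression (distortion) plus additive noise.
    tx_out = [math.tanh(x) + rng.gauss(0.0, 0.1) for x in ideal]
    wanted = [0.01 * math.sin(2 * math.pi * 0.01 * i) for i in range(n)]
    received = [
        sum(hk * tx_out[i - k] for k, hk in enumerate(h) if i >= k) + wanted[i]
        for i in range(n)
    ]
    with_ideal_copy = nlms_cancel(ideal, received)
    with_measured_copy = nlms_cancel(tx_out, received)  # reference taken at Tx output
    return (residual_interference(with_ideal_copy, wanted),
            residual_interference(with_measured_copy, wanted))


ideal_res, measured_res = simulate()
print(f"residual using ideal waveform:     {ideal_res:.2e}")
print(f"residual using measured reference: {measured_res:.2e}")
```

With the ideal waveform, the filter can only remove the part of the interference that is linearly related to what was intended, so distortion and noise survive. With the measured reference, the received interference is an exact filtered copy of the reference, and the residual falls to a small fraction of that level.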

Suppressing residual transmit signals and extraneous noise improves the reception of wireless signals from devices operating on the same frequency. This effectively increases the number of devices that can be supported and their data rates. The researchers envision IBFD operation within a phased-array system as a novel paradigm that may lead to significant performance improvements for next-generation wireless systems.

Predicted Improvements in Wireless Service

The research team carried out in-laboratory assessments comparing Lincoln Laboratory’s proposed system with current cellular technology and state-of-the-art IBFD systems. Based on these, the team estimates that the phased-array antenna system with IBFD capability can support 100 times more devices and 10 times higher data rates than the currently used 4G LTE (fourth-generation Long-Term Evolution) standard for wireless communications. Moreover, the phased-array system can achieve an extended communication range of 60 miles, which is more than 2.5 times greater than that of the next-best system.
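Read literally, the range comparison also bounds the reach of the next-best system. Writing R_next for that range (a symbol introduced here only for illustration):

```latex
60~\text{mi} > 2.5\,R_{\text{next}}
\quad\Longrightarrow\quad
R_{\text{next}} < \frac{60~\text{mi}}{2.5} = 24~\text{mi}
```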

Because phased-array antenna systems use multiple antennas to focus radiation and perform beamforming operations, Lincoln Laboratory’s system is slightly larger than the single-antenna system planned for 5G NR (fifth-generation New Radio), at 1.5 square feet versus 1 square foot. However, most base stations should be able to accommodate either antenna size.

According to research team member Jonathan Doane, the significant improvements offered by Lincoln Laboratory’s system could give future wireless users cutting-edge experiences. These include connecting more devices inside their smart homes and maintaining high data rates in large crowds, both of which are impossible with current technology.
